Now, when we win, we tend to win big - about a quarter of the time, we'd get 53 or more correct. That would net us at least a 49% profit. The flip side is that, a quarter of the time, we get 46 or fewer and lose roughly a quarter of our bankroll or more. There's a one in twenty chance that we'll lose half of our bankroll (although a one in ten chance that we'll double it). With bigger edges or shorter odds, the fluctuations can be terrifying.
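To see where profit figures like these come from, here is a minimal sketch that turns a number of winners into a bankroll multiple. The odds of 2.1 are an assumption: they are not stated in this section, but they are what a full Kelly stake of about 4.55% on a 50-50 shot implies. The function name is made up for illustration.

```python
import math

def bankroll_multiple(wins, n_bets, stake, odds):
    """Bankroll multiple after `wins` winners out of `n_bets`,
    staking the same fraction of the current bankroll on every bet."""
    losses = n_bets - wins
    # Work in logs so that long runs of bets stay numerically stable.
    log_growth = (wins * math.log(1 + stake * (odds - 1))
                  + losses * math.log(1 - stake))
    return math.exp(log_growth)

ODDS = 2.1          # assumed decimal odds (inferred, not given in this section)
STAKE = 0.05 / 1.1  # full Kelly for p = 0.5 at these odds, about 4.55%

for wins in (41, 46, 50, 53, 57):
    profit = bankroll_multiple(wins, 100, STAKE, ODDS) - 1
    print(f"{wins} winners out of 100: {profit:+.1%}")
```

Under those assumptions, 53 winners comes out at roughly a 49% profit and 46 winners at a loss of a little under a quarter.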

Is there a way to reduce them? Well, obviously, if you don't bet so much, your bankroll is steadier. But let's say you're still pretty greedy, and want to maximise your worst plausible outcome.

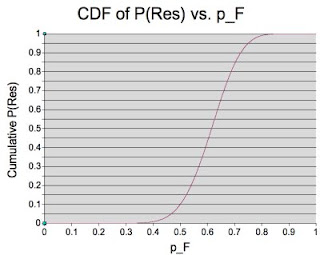

How do you even define that? Well, given that we're looking at a binomial distribution, we can use stats to help us. If we look at N identical bets with probability p, we know that 97.7% of the time* we'll win at least Wmin = Np - 2 sqrt(Np(1-p)) of them. Bumping up the 2 to 3 gives us 99.87% confidence.
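As a rough sketch of that calculation, the snippet below computes Wmin and the matching one-sided confidence level from the normal approximation. The function names and the `z` parameter (the 2 or 3 in the formula) are my own labels, not anything standard.

```python
import math

def worst_plausible_wins(n_bets, p, z=2.0):
    """Wmin = Np - z * sqrt(Np(1-p)), using the normal approximation."""
    mean = n_bets * p
    sd = math.sqrt(n_bets * p * (1 - p))
    return mean - z * sd

def normal_confidence(z):
    """Probability that a standard normal variable is above -z."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))

print(worst_plausible_wins(100, 0.5))   # 100 bets on a 50-50 shot
print(f"{normal_confidence(2):.2%}")    # confidence for two standard deviations
print(f"{normal_confidence(3):.2%}")    # confidence for three
```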

Whatever value we choose - I'm happy enough with two - outlines our worst plausible set of results over N trials**. We can then calculate our worst plausible outcome, which is B0 (1 + k(o-1))^Wmin (1 - k)^(N-Wmin).

The trick now is to maximise this with respect to k (take logs, differentiate and set the result to zero). It turns out, if we define p* as Wmin/N, that our optimal Kelly stake in this sense is (p*o-1)/(o-1). And if it's less than zero, we don't bet.
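For anyone who wants the working, here is a sketch of the maximisation, treating Wmin as fixed and writing B for the worst plausible bankroll (the symbol B is my own shorthand):

```latex
\begin{align*}
\ln B &= \ln B_0 + W_{\min}\ln\bigl(1 + k(o-1)\bigr) + (N - W_{\min})\ln(1-k) \\
\frac{d \ln B}{dk} &= \frac{W_{\min}(o-1)}{1 + k(o-1)} - \frac{N - W_{\min}}{1-k} = 0 \\
W_{\min}(o-1)(1-k) &= (N - W_{\min})\bigl(1 + k(o-1)\bigr) \\
W_{\min}\,o - N &= k\,N\,(o-1) \\
k &= \frac{p^{*}o - 1}{o - 1}, \qquad p^{*} = \frac{W_{\min}}{N}
\end{align*}
```

This is the ordinary Kelly formula with p* standing in for p, which is why the stake drops to zero once p* falls to 1/o.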

This is quite restrictive - in the case above, with N = 100 we simply wouldn't bet - p* is 40%, far too low to allow us to meet our minimum. N = 1000 isn't that much better - p* = 46.8%, where we need 47.6%. N = 2500 is just about enough.
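These numbers are easy enough to check. The sketch below assumes a 50-50 shot at odds of 2.1; the odds are inferred from the 47.6% break-even figure rather than stated here, and the function names are hypothetical.

```python
import math

def p_star(n_bets, p, z=2.0):
    """Worst plausible strike rate, Wmin / N."""
    return p - z * math.sqrt(p * (1 - p) / n_bets)

def modified_kelly_stake(n_bets, p, odds, z=2.0):
    """Kelly stake computed from p* instead of p; zero means no bet."""
    stake = (p_star(n_bets, p, z) * odds - 1) / (odds - 1)
    return max(stake, 0.0)

P, ODDS = 0.5, 2.1  # assumed: 50-50 shot, odds inferred from the break-even rate

print(f"break-even strike rate: {1 / ODDS:.1%}")
for n in (100, 1000, 2500):
    print(f"N = {n}: p* = {p_star(n, P):.1%}, "
          f"stake = {modified_kelly_stake(n, P, ODDS):.2%}")
```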

Here are the results of running 2500 bets 1000 times over (using the two staking patterns on the same events):

| | Pure Kelly | Modified |
|---|---|---|
| Stake | 4.55% | 0.73% |
| AROI*** | 26.07% | 3.81% |
| SD | 64.52% | 23.58% |
| Worst | -79.85% | -11.44% |

So, on average, Kelly outperforms the modified version by some way - but at the cost of much higher risk. The modified stakes 'guarantee' that the lowest plausible value is as large as possible.
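If you'd like to run this sort of experiment yourself, here is a minimal sketch. It is not the code behind the table: the odds of 2.1 are an assumption, and the exact way the returns in the table were measured isn't spelled out here, so this version simply reports the total return on the starting bankroll and shouldn't be expected to reproduce those figures. Both staking plans are applied to the same simulated events.

```python
import math
import random
import statistics

def total_return(wins, n_bets, stake, odds):
    """Return on the starting bankroll after staking a fixed fraction each bet."""
    log_growth = (wins * math.log(1 + stake * (odds - 1))
                  + (n_bets - wins) * math.log(1 - stake))
    return math.exp(log_growth) - 1

def simulate(n_bets, n_runs, p, odds, stakes, seed=0):
    """Run `n_runs` sequences of `n_bets` bets; every staking plan sees the same events."""
    rng = random.Random(seed)
    results = {name: [] for name in stakes}
    for _ in range(n_runs):
        # With a fixed fractional stake, only the number of winners matters.
        wins = sum(rng.random() < p for _ in range(n_bets))
        for name, stake in stakes.items():
            results[name].append(total_return(wins, n_bets, stake, odds))
    return results

if __name__ == "__main__":
    stakes = {"Pure Kelly": 0.0455, "Modified": 0.0073}
    results = simulate(2500, 1000, 0.5, 2.1, stakes)  # odds of 2.1 assumed
    for name, returns in results.items():
        print(f"{name}: mean {statistics.mean(returns):+.2%}, "
              f"SD {statistics.pstdev(returns):.2%}, "
              f"worst {min(returns):+.2%}")
```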

It is possible to make up the discrepancy to a fair degree by increasing N, because the larger N is, the closer p* is to p (the square root term, 2 sqrt(p(1-p)/N), shrinks as N grows).

Modified Kelly staking is worthwhile for bets with sufficiently large edges, or over sufficiently long runs. If you plan to make only 100 bets, you would need odds of at least 2.5 on a 50-50 shot before the modified stakes allowed you to bet.
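Both thresholds drop out of requiring p* to be bigger than 1/o. A rough sketch follows, with function names of my own invention; the odds of 2.1 in the second call are assumed rather than taken from this section.

```python
import math

def min_odds(n_bets, p, z=2.0):
    """Odds at which the modified stake is exactly zero; you need better than this."""
    p_star = p - z * math.sqrt(p * (1 - p) / n_bets)
    if p_star <= 0:
        return math.inf
    return 1 / p_star

def min_bets(p, odds, z=2.0):
    """Number of bets at which the modified stake is exactly zero; you need more than this."""
    edge = p - 1 / odds
    if edge <= 0:
        return math.inf  # no edge, so no run is ever long enough
    return z * z * p * (1 - p) / edge ** 2

print(min_odds(100, 0.5))   # break-even odds for 100 bets on a 50-50 shot
print(min_bets(0.5, 2.1))   # break-even run length at assumed odds of 2.1
```

The second function is just the first condition rearranged for N, so it inherits the same normal approximation.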

I just typed bed, which is probably a Freudian slip. It's getting late.

* Look it up in a normal distribution table.

** We needn't assume the bets are identical. In general, we can replace Np with sum(p) and the bit inside the square root would be sum( p(1-p) ). But that complicates things a bit more than we need for the proof of concept.
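A sketch of that more general Wmin, for a list of win probabilities that needn't all be the same (the function name is hypothetical, and it only covers the count of winners, not the staking):

```python
import math

def worst_plausible_wins(probs, z=2.0):
    """sum(p) - z * sqrt(sum(p(1-p))) for independent bets with differing p."""
    expected = sum(probs)
    variance = sum(p * (1 - p) for p in probs)
    return expected - z * math.sqrt(variance)

print(worst_plausible_wins([0.5] * 100))               # matches the identical-bet case
print(worst_plausible_wins([0.5] * 50 + [0.6] * 50))   # a mixed book of bets
```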

*** Average Return on Investment